The Data Movement SDK is a Java library for applications that need to move large amounts of data into, out of, or within a MarkLogic cluster. For those familiar with the existing MarkLogic ecosystem, it provides the core capabilities of mlcp and CoRB in the vernacular of the Java Client API.

- Ingest data in bulk or streams from any Java I/O source, leveraging your entire cluster for scale-out performance

- Bulk process documents in situ in the database using JavaScript or XQuery code invoked remotely from Java

- Export documents based on a query, optionally aggregating to a single artifact, such as CSV rows

Integrating data from multiple systems starts with getting data from those systems. The Data Movement SDK allows you to do this easily, efficiently, and predictably in a programmatic fashion so that it can be integrated into your overall architecture.

Java is the most widely used language in the enterprise. It has a mature set of tools and an immense ecosystem. MarkLogic provides Java APIs to make working with MarkLogic in a Java environment simple, fast, and secure. The Java Client API is aimed primarily at interactive applications, such as running queries to build a UI or populate a report, or updating individual documents. Its interactions are synchronous: make a request, get a response.

The Data Movement SDK complements the Java Client API by adding an asynchronous interface for reading, writing, and transforming data in a MarkLogic cluster. A primary goal of the Data Movement SDK is to enable integration with existing ETL-style workflows, for example writing streams of data from a message queue or transferring relational data via JDBC or an ORM.

Set-up

The Data Movement SDK is packaged as part of the Java Client API JAR, so an application can mix short synchronous requests with long-lived asynchronous work. The JAR is hosted on Maven Central.

Add the following to your project’s build.gradle file:

MarkLogic publishes the source code and the JavaDoc along with the compiled JAR. You can use these in your IDE to provide context-sensitive documentation, navigation, and debugging.

To configure Eclipse or IntelliJ, add the following section to your application’s build.gradle file and run gradle eclipse or gradle idea from the command line.

Or for Maven users, add the following to your project’s pom.xml:

Writing Batches of Documents

WriteBatcher provides a way to build long-lived applications that write data to a MarkLogic cluster. Using the add() method, you add documents to an internal buffer. When that buffer fills (that is, reaches the batch size configured with withBatchSize()), WriteBatcher automatically writes the batch to MarkLogic as an atomic transaction. Under the covers, the DataMovementManager uses round-robin to choose which host receives each batch, distributing the workload across a multi-host cluster. As a result, writing can continue if a forest becomes unavailable, but not if a host does. A future release will accommodate host failures as well.
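To make the buffering and round-robin behavior described above concrete, here is a minimal, JDK-only sketch. It is not the SDK's implementation and uses none of its classes: `BufferingWriterSketch`, its `sender` callback, and the host names are hypothetical stand-ins, and the sketch is single-threaded for clarity. It only illustrates the idea of filling a buffer to a configured batch size and rotating each full batch to the next host.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.BiConsumer;

// Hypothetical illustration only; not part of the MarkLogic Java Client API.
final class BufferingWriterSketch {
    private final int batchSize;
    private final List<String> hosts;
    private final BiConsumer<String, List<String>> sender; // (host, batch) -> perform the write
    private final List<String> buffer = new ArrayList<>();
    private int nextHost = 0;

    BufferingWriterSketch(int batchSize, List<String> hosts,
                          BiConsumer<String, List<String>> sender) {
        if (batchSize < 1 || hosts.isEmpty()) {
            throw new IllegalArgumentException("need a positive batch size and at least one host");
        }
        this.batchSize = batchSize;
        this.hosts = new ArrayList<>(hosts);
        this.sender = sender;
    }

    // Buffer one document; send the batch as soon as the buffer reaches batchSize.
    void add(String doc) {
        buffer.add(doc);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    // Send whatever is buffered (possibly a partial batch) to the next host in rotation.
    void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        List<String> batch = new ArrayList<>(buffer);
        buffer.clear();
        String host = hosts.get(nextHost);
        nextHost = (nextHost + 1) % hosts.size(); // round-robin
        sender.accept(host, batch);
    }

    public static void main(String[] args) {
        BufferingWriterSketch writer = new BufferingWriterSketch(
            2, Arrays.asList("host-a", "host-b"),
            (host, batch) -> System.out.println(host + " <- " + batch));
        for (int i = 1; i <= 5; i++) {
            writer.add("doc" + i);
        }
        writer.flush(); // push the final partial batch
    }
}
```

With a batch size of 2 and two hosts, five documents go out as three batches that alternate between the hosts. The last batch is partial and is only sent because of the explicit `flush()`.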

The example below creates synthetic JSON documents inline. In a real application, though, you’d likely get your data from some sort of Java I/O. The Data Movement SDK leverages the same I/O handlers that the Java Client API uses to map Java data types to MarkLogic data types.

The onBatchSuccess() and onBatchFailure() callbacks on the WriteBatcher instance provide hooks into the lifecycle for things like progress and error reporting. The following illustrates the general structure of how an application would employ WriteBatcher.
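As a rough, JDK-only model of how success and failure callbacks can hook into a batch lifecycle, consider the sketch below. The class `BatchCallbacksSketch`, its `transport` function, and the listener signatures are assumptions made for illustration; they mirror the names onBatchSuccess() and onBatchFailure() but are not the SDK's actual types.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BiConsumer;
import java.util.function.Consumer;

// Hypothetical illustration only; not part of the MarkLogic Java Client API.
final class BatchCallbacksSketch {
    private final List<Consumer<List<String>>> successListeners = new ArrayList<>();
    private final List<BiConsumer<List<String>, Throwable>> failureListeners = new ArrayList<>();
    private final Consumer<List<String>> transport; // performs the write; throws on failure

    BatchCallbacksSketch(Consumer<List<String>> transport) {
        this.transport = transport;
    }

    // Fluent registration, so listeners can be chained when the writer is configured.
    BatchCallbacksSketch onBatchSuccess(Consumer<List<String>> listener) {
        successListeners.add(listener);
        return this;
    }

    BatchCallbacksSketch onBatchFailure(BiConsumer<List<String>, Throwable> listener) {
        failureListeners.add(listener);
        return this;
    }

    // Attempt the write, then notify exactly one family of listeners.
    void write(List<String> batch) {
        try {
            transport.accept(batch);
        } catch (RuntimeException e) {
            for (BiConsumer<List<String>, Throwable> l : failureListeners) {
                l.accept(batch, e);
            }
            return;
        }
        for (Consumer<List<String>> l : successListeners) {
            l.accept(batch);
        }
    }

    public static void main(String[] args) {
        AtomicInteger written = new AtomicInteger();
        BatchCallbacksSketch writer = new BatchCallbacksSketch(batch -> {
            if (batch.contains("bad")) {
                throw new IllegalStateException("rejected");
            }
        })
            .onBatchSuccess(batch -> written.addAndGet(batch.size()))   // progress reporting
            .onBatchFailure((batch, err) ->
                System.err.println("failed " + batch + ": " + err.getMessage())); // error reporting

        writer.write(Arrays.asList("a", "b"));
        writer.write(Arrays.asList("c", "bad"));
        System.out.println("documents written: " + written.get());
    }
}
```

Success listeners run outside the `try` block on purpose, so an exception thrown by a progress reporter is not mistaken for a failed write.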

The above omits the details of the actual data that’s sent to the writer.add() method (★). The simplest implementation might look something like:

Querying Documents

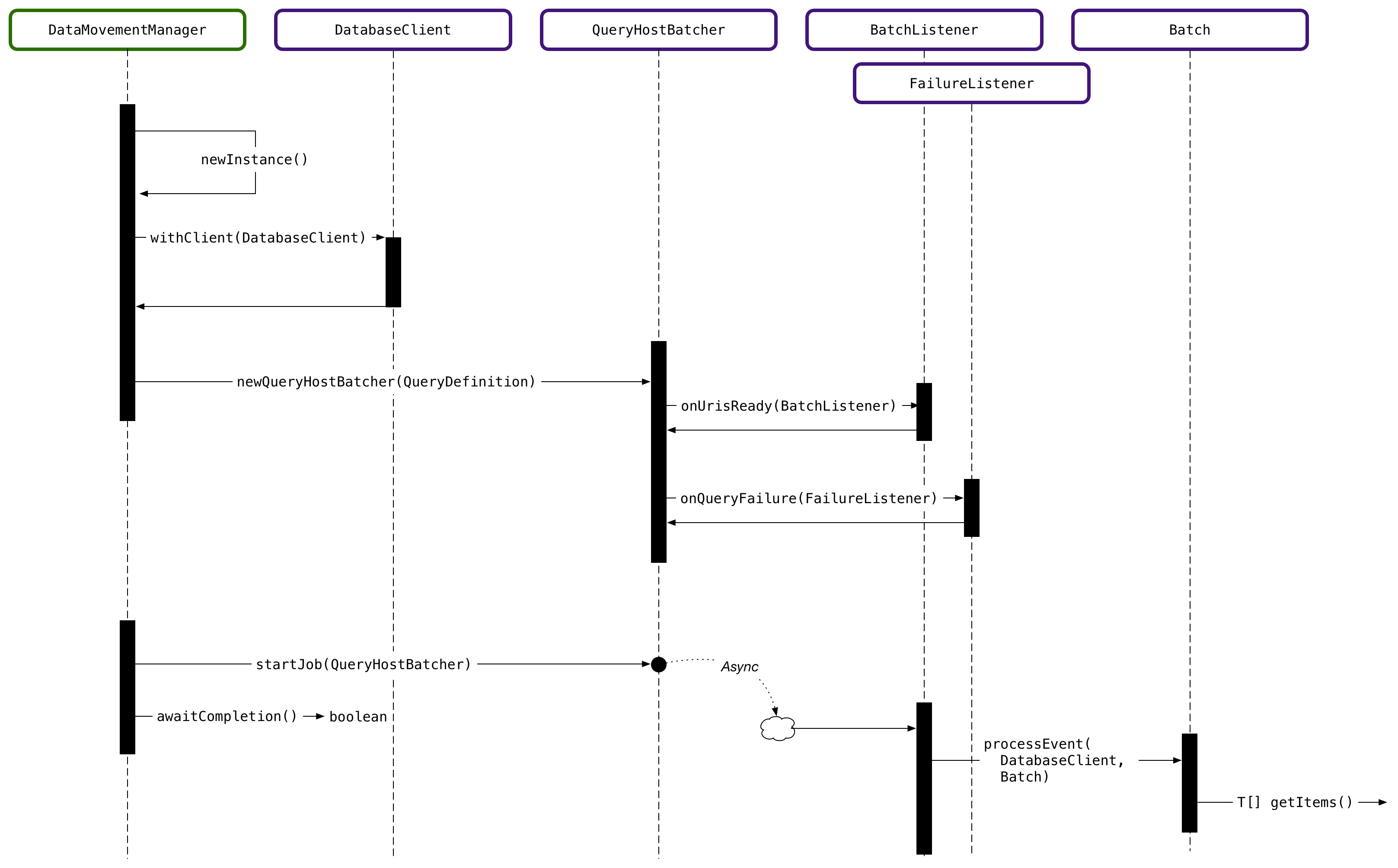

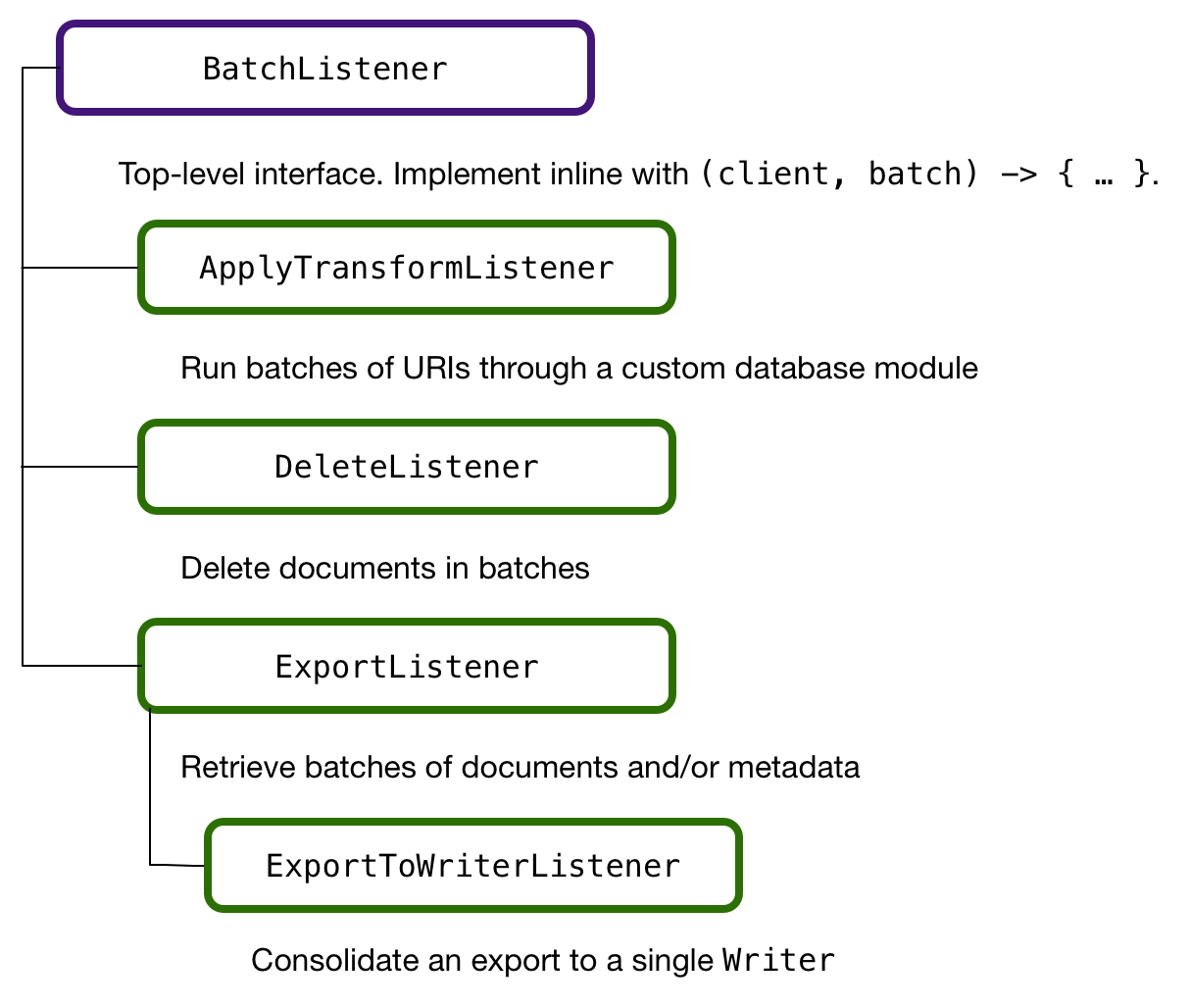

QueryBatcher is the entry point for accessing data already in a MarkLogic database. Its job is to issue a query that scopes a set of documents as input to some other work, such as transformation or deletion. You express the query using any of the query APIs available in the Java Client API, such as structured query or query by example. The onUrisReady() method of QueryBatcher is the key. It accepts a BatchListener instance that determines what to do with the list of URIs, or unique document identifiers, returned by the query. The DataMovementManager figures out how to split the URIs efficiently in order to balance the workload across a cluster, delivering them in batches to the BatchListener. A BatchListener implementation can do anything it wants with the URIs.
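The shape of that contract, a stream of URI batches handed to a listener, can be sketched with plain JDK types. This is only an illustration under simplifying assumptions: `UriBatchingSketch` and `forEachBatch` are hypothetical names, the URIs are a fixed in-memory list rather than query results, and batches are cut by position, whereas the SDK decides for itself how to split work across the cluster.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical illustration only; not part of the MarkLogic Java Client API.
final class UriBatchingSketch {

    // Deliver the URIs to the listener in consecutive batches of at most batchSize.
    static void forEachBatch(List<String> uris, int batchSize, Consumer<List<String>> listener) {
        if (batchSize < 1) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        for (int from = 0; from < uris.size(); from += batchSize) {
            int to = Math.min(from + batchSize, uris.size());
            // Copy the slice so the listener owns an independent list.
            listener.accept(new ArrayList<>(uris.subList(from, to)));
        }
    }

    public static void main(String[] args) {
        List<String> uris = Arrays.asList("/a.json", "/b.json", "/c.json", "/d.json", "/e.json");
        // The listener is free to do anything with each batch: transform, delete, export.
        forEachBatch(uris, 2, batch -> System.out.println("processing " + batch));
    }
}
```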

The first example below shows a BatchListener implementation that uses a server-side module named harmonize-entity to transform documents matching a query. The Data Movement SDK uses the same server-side transform mechanism as the rest of the Java Client API to manage and invoke code in the database. At a high level, this is similar to how other databases use stored procedures. However, MarkLogic transforms are implemented in XQuery or JavaScript and have access to all of the rich built-in APIs available in the database runtime environment.

The second example below shows how you’d use the DeleteListener, an implementation of BatchListener, to bulk delete the documents that match a query. Similarly, you can build your own BatchListener implementations.

Note the use of withConsistentSnapshot() on the QueryBatcher instance above. This is important whenever the matched documents might change between batches, either as a result of work done by the job itself, such as batches that delete documents, as above, or because other transactions are changing data that matches your query. withConsistentSnapshot() ensures that all batches see the exact same set of documents that existed at job start and that subsequent updates do not affect which documents match the query.
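Why this matters for a deleting job can be shown with a small, JDK-only analogy. The sketch below is an assumption-laden model, not how MarkLogic implements snapshots: it treats the database as an in-memory list, pages through it by offset, and uses a copied list to stand in for a consistent view. `SnapshotPagingSketch` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration only; not part of the MarkLogic Java Client API.
final class SnapshotPagingSketch {

    // Pages through the LIVE result set by offset while deleting each page.
    // Deleting shifts the remaining documents, so later pages skip some of them.
    static List<String> deleteWithoutSnapshot(List<String> docs, int pageSize) {
        List<String> live = new ArrayList<>(docs);
        int offset = 0;
        while (offset < live.size()) {
            int to = Math.min(offset + pageSize, live.size());
            List<String> page = new ArrayList<>(live.subList(offset, to));
            live.removeAll(page);
            offset += pageSize;
        }
        return live; // documents the job never visited
    }

    // Pages through a fixed view taken at job start, so every document is visited once.
    static List<String> deleteWithSnapshot(List<String> docs, int pageSize) {
        List<String> live = new ArrayList<>(docs);
        List<String> snapshot = new ArrayList<>(live); // stands in for a consistent snapshot
        for (int offset = 0; offset < snapshot.size(); offset += pageSize) {
            int to = Math.min(offset + pageSize, snapshot.size());
            live.removeAll(snapshot.subList(offset, to));
        }
        return live;
    }

    public static void main(String[] args) {
        List<String> docs = Arrays.asList("d1", "d2", "d3", "d4", "d5", "d6");
        System.out.println("left behind without snapshot: " + deleteWithoutSnapshot(docs, 2)); // [d3, d4]
        System.out.println("left behind with snapshot:    " + deleteWithSnapshot(docs, 2));    // []
    }
}
```

Without the fixed view, the second page starts at offset 2 of an already shortened list, so `d3` and `d4` are never seen. With the fixed view, the set of matching documents is decided once, at job start.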

See Also

- JavaDoc for the Data Movement SDK

- Asynchronous Multi-Document Operations chapter of the Java Application Developer's Guide

- MLU On Demand Course

Stack Overflow: Get the most useful answers to questions from the MarkLogic community, or ask your own question.
